Client

OFFF Festival

Year

2025

Type of sector

Arts & Culture, Technology

Tag

AI Research, Installations

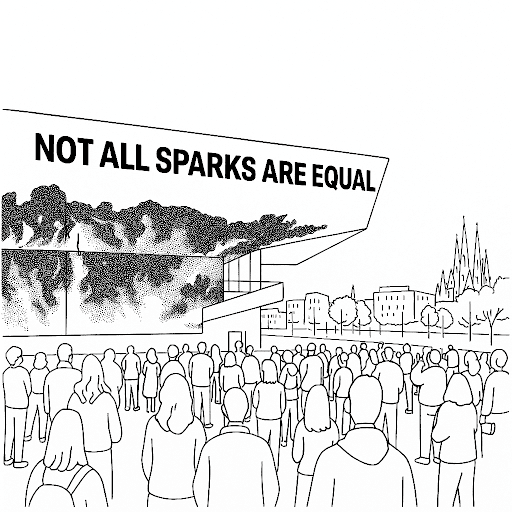

Fire dances across the human timeline, from the earliest hearths to last New Year’s fireworks. But in the eyes of some AI systems, the flames of celebration cannot be distinguished from the fires of oppression: a dancing ember is a dancing ember, pixel by pixel, data point by data point. This flattening of nuance is at the heart of “Not All Sparks Are Equal,” a new video mapping installation that explores how technological perception often strips away the vital context that gives human experience its meaning.

In Catalonia, fire has two dramatically different public faces. On one side stands the correfoc, a beloved tradition dating back to medieval times in which dancers dressed as devils carry spinning, sparking fireworks through crowded streets. These “fire-runs” represent a collective reclamation of public space through joyful cultural celebration, embodying community strength and Catalan identity. The tradition, which grew in popularity after the fall of Franco’s dictatorship as part of Catalonia’s cultural revival, sees participants of all ages dance beneath showers of harmless sparks in a carnival atmosphere.

On the other side stand police charges against protesters, where tear gas canisters, flash grenades, and rubber bullets transform public squares into zones of state-enforced order, confrontations that tend to end with garbage containers in flames. Here, fire becomes not a symbol of communal joy but a tool of control and suppression, and in the last five years Catalonia has seen many examples of this use of force by the government.

To modern surveillance algorithms, however, these profoundly different scenarios register as identical: chaotic crowds, heat signatures, unpredictable movements, and bright flashes against the night. The algorithm, blind to context, sees only potential disruption — a threat to be managed.

Through this project, we’re exploring how algorithms flatten complex realities into simplistic, clean-cut binary categories: safe/dangerous, orderly/chaotic, permitted/prohibited.

A correfoc dancer and a protester fleeing tear gas may generate identical heat signatures and movement patterns to an algorithm, but the gulf between these experiences could not be wider: one celebrates cultural identity while the other fights for rights.
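To make that flattening concrete, here is a minimal sketch in Python. It is not the installation’s software or any real surveillance system: the `Scene` fields, the feature values, the averaging rule, and the `0.6` threshold are all hypothetical, chosen only to illustrate how a classifier that sees nothing but low-level signals must give two scenes the same label when those signals match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    # Hypothetical low-level signals, each normalized to the range 0..1.
    crowd_density: float
    heat_intensity: float
    motion_irregularity: float
    flash_rate: float
    # The human context. The classifier below never reads this field.
    context: str


def features(scene: Scene) -> tuple:
    """Return only what the (assumed) model can perceive."""
    return (
        scene.crowd_density,
        scene.heat_intensity,
        scene.motion_irregularity,
        scene.flash_rate,
    )


def classify(scene: Scene, threshold: float = 0.6) -> str:
    """Toy binary rule: average the signals and compare to a threshold."""
    signals = features(scene)
    score = sum(signals) / len(signals)
    return "dangerous" if score >= threshold else "safe"


# Two scenes with identical signals and opposite meanings (illustrative values).
correfoc = Scene(0.9, 0.8, 0.85, 0.9, context="correfoc: communal celebration")
charge = Scene(0.9, 0.8, 0.85, 0.9, context="police charge against protesters")

print(classify(correfoc), "|", correfoc.context)
print(classify(charge), "|", charge.context)
```

Because `context` never enters `features`, no amount of threshold tuning can separate the two scenes; the difference between them lives entirely in information the model was never given.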

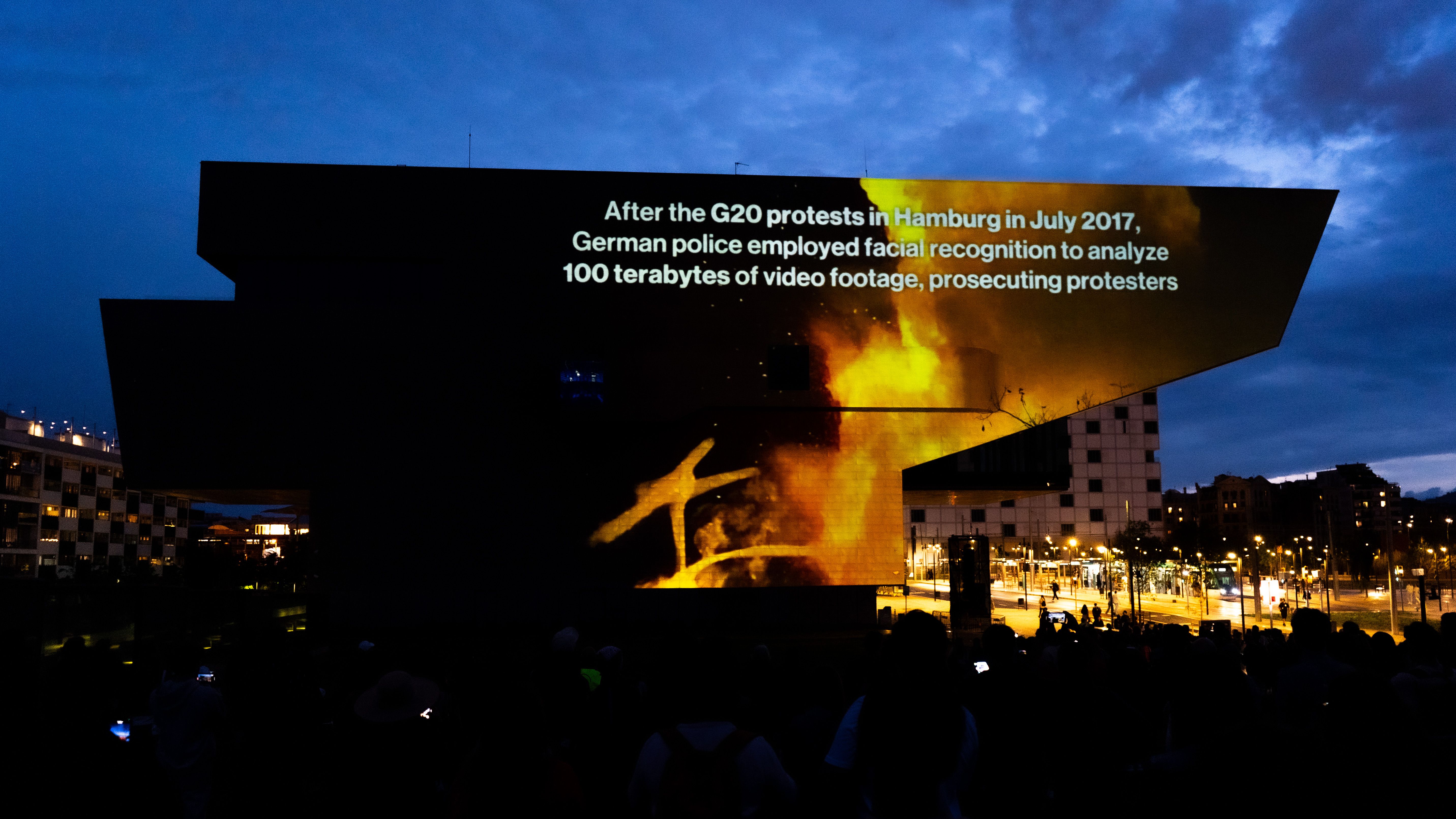

This algorithmic blindness isn’t accidental but structural. Developed within Silicon Valley’s monocultural framework and trained on Western datasets, these systems impose a binary worldview that sees order and chaos as opposing forces rather than interconnected elements of social expression. The projection installation highlights this by simulating algorithmic readings over authentic footage: “ERROR: 98% match — Riot detected (confidence: 0.92)” flashes over scenes of correfoc participants, while identical confidence scores appear over footage of police charges.

In contrast to those predictive systems, there are several cosmologies that view fire as a transformative force connecting multiple worlds, neither inherently dangerous nor inherently safe, but deeply contextual. These worldviews embrace complexity, recognizing that the same element can simultaneously hold different meanings depending on its context and intention. Algorithms trained on datasets that prioritize simple perspectives and binary categorizations may work well for tasks such as factory optimization, but they fare terribly at grasping these nuances.

When Speed Erases Context

The philosophical underpinnings of “Not All Sparks Are Equal” draw from the concept of “dromology,” the study of how speed transforms society. The concept holds that our contemporary obsession with rapid processing privileges velocity over depth, resulting in a flattening of meaning. In algorithmic surveillance, this “dromological” effect transforms the expansive space of human expression into streams of data points processed at inhuman speeds.

Speed is not just about going faster; it’s about what gets lost in the acceleration. When algorithms process visual information at rates beyond human comprehension, context becomes the first casualty. This erasure is not merely a technical limitation but a political reality. As surveillance technologies proliferate across public spaces, cultural expressions increasingly must pass through algorithmic gatekeepers that reduce participants to biometric data, heat signatures, and potential security risks. Festivals, protests, and cultural gatherings, the very spaces where communities express their collective identity, become sites of automated categorization and control. This project points out the inherent collapse of meaning that AI technologies bring with them.

The stakes of this algorithmic blindness extend far beyond philosophical debate. In 2022, Amnesty International documented at least 50 incidents across Europe where police deployed surveillance technology during peaceful demonstrations. After the G20 protests in Hamburg in 2017, German police analyzed 100 terabytes of video footage using facial recognition algorithms to identify and prosecute protesters. In the UK, police have used live facial recognition technology at events like the Notting Hill Carnival, scanning approximately 100,000 attendees.

From Misrecognition to Resistance

The deeper threat here isn’t just technical misclassification; it’s cultural submission disguised as protection. If algorithms can’t tell the difference between a festival and a riot, the danger isn’t just in the error rate. The danger is in allowing these systems to govern what is permitted in public space at all. We should not have to survive our own festivities. Celebrations like the correfoc shouldn’t be allowed only when they can pass through a lens of biometric legibility or algorithmic permission. What’s at stake is not merely data accuracy; it’s the right to occupy public space with ambiguity, with contradiction, with unruly joy that doesn’t have to justify itself to a predictive model.

This isn’t a call for better training datasets. It’s a call to draw lines: some things do not belong under the rule of machine classification. Cultural spaces, protests, parades, rituals: these are not UX flows or security scenarios to optimize. They are messy, vital, human. If algorithms can’t understand them, then maybe they don’t belong there at all.

As correfocs once danced defiantly against Franco’s bans, we must now resist their quiet algorithmic domestication. This time not through laws, but through software. Through silent flags of permission. Through the bureaucratized language of safety. If we are not vigilant, the fires of joy may again be mistaken for threats, only this time, not by men in grey uniforms, but by invisible systems that never sleep, and never ask why.

Let’s not retrofit culture to fit surveillance. Let’s keep culture wild enough to break it.

Let’s let some sparks stay unclassified.

Latest screenings and mappings:

1 May 2025, Screening at AIxDesign Amsterdam, Netherlands

7–10 May 2025, Mapping at Design Museum of Barcelona, Spain

24 July 2025, Screening at AIxD Film Festival, Apollo Kino Aachen, Germany